The Great Redirection: How AI Swallowed Science

Billions flow into AI, leaving other disciplines gasping, yet the long game may belong to everyone

AI researchers have often tried to build knowledge into their agents

-Richard Sutton

Science’s New Compass

Every era has its guiding star, the key invention or discovery that reconfigures human thought and shifts the flow of capital. The nineteenth century was driven by steam, the twentieth by electricity, and our own century is defined by algorithms, or rather, the ability to teach machines to learn on their own. Artificial intelligence (AI), once a vague concept confined to science fiction and philosophical debates, has become the new foundation of science.

I think my previous articles (here, here, here, and here) have painted a bleak picture of AI, and perhaps some of those fears still seem justified. Yet it seems increasingly dishonest, or at least one-sided, to continue treating AI as a predator feeding on science. The more closely one looks, the clearer it becomes that AI is not science’s rival but its catalyst.

Seeking an improvement that makes a difference in the shorter term, researchers seek to leverage their human knowledge of the domain, but the only thing that matters in the long run is the leveraging of computation. These two need not run counter to each other, but in practice they tend to. Time spent on one is time not spent on the other. There are psychological commitments to investment in one approach or the other. And the human-knowledge approach tends to complicate methods in ways that make them less suited to taking advantage of general methods leveraging computation.

-Richard Sutton

Investing in AI research does not reduce funding for molecular biology; instead, it can multiply the potential discoveries: newly predicted protein structures, newly synthesized materials, and newly understood climatic patterns. Funding AI today is not about abandoning science; it’s about accelerating it.

The pivot, then, was not a choice but an inevitability. When one discipline becomes a force multiplier for all others, to resist investing in it is to handicap oneself deliberately. Just as no sane government of the 1950s would have said, “Let us ration our spending on semiconductors lest mathematics be offended,” today the wise realize that AI is not a rival field to science but the scaffolding upon which science must now climb.

The AI Surge: Data and Dynamics

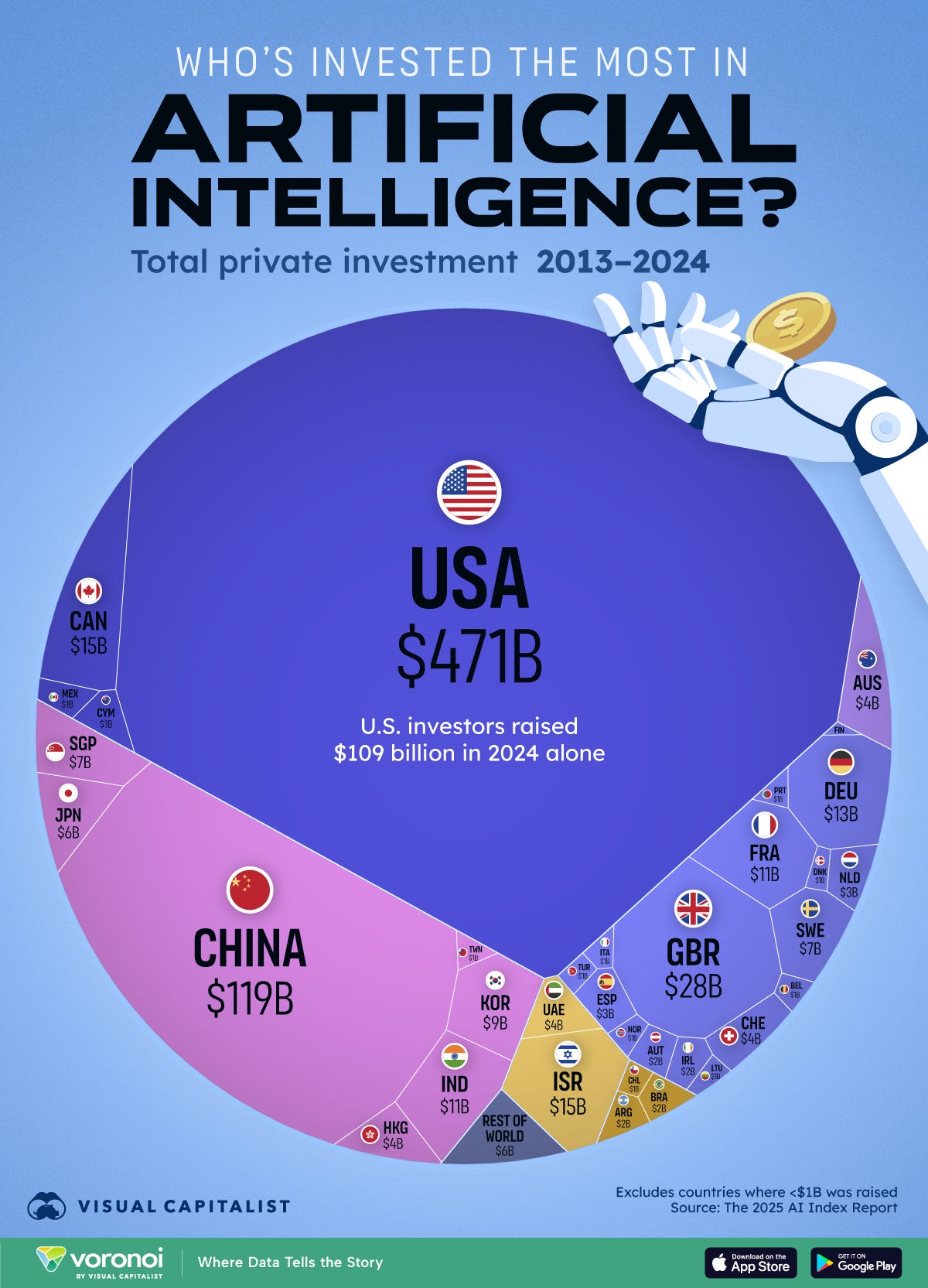

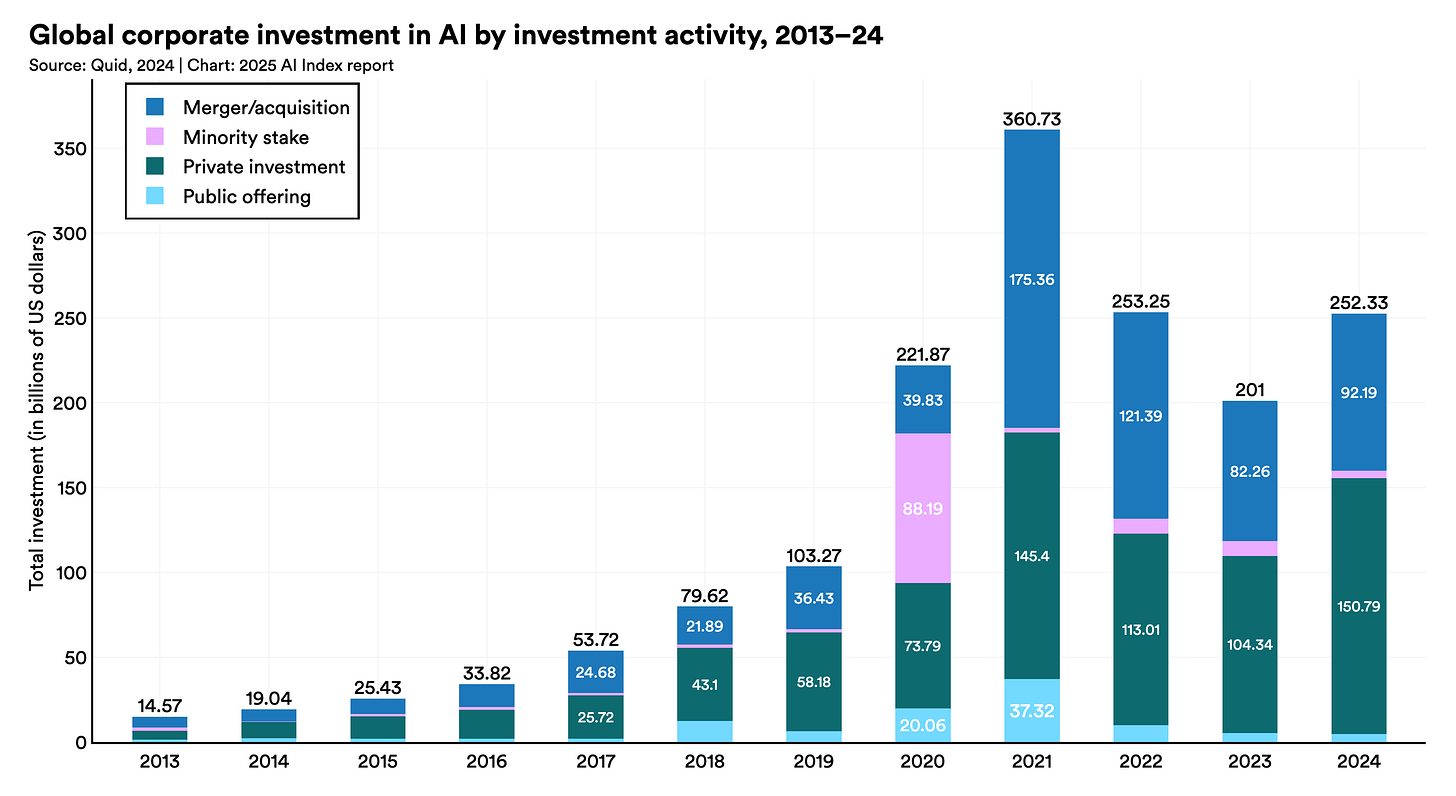

No one needs to be a prophet or even a close accountant to notice the current craze for AI. The numbers, bright and overwhelming like neon signs in Piccadilly Circus, speak loudly enough. In 2024, corporate investment in AI surged to over $250 billion, according to the Stanford AI Index, more than Greece’s annual GDP, and well above what most countries spend on research of any kind. Venture capital has followed just as eagerly: in 2023 alone, AI companies took more than a quarter of all global venture funding, with some “mega-rounds” exceeding $1 billion. What once seemed impossible, that a single field could grab the attention of both Wall Street and Whitehall, is now a common reality.

Governments, always eager to be at the forefront, have expanded their AI investments with a fervor that would make even the Cold War science race seem modest. The United States’ CHIPS and Science Act pledged $200 billion over ten years, with a significant portion dedicated to AI research and infrastructure. The National Science Foundation has allocated hundreds of millions of dollars to AI Institutes focused on areas such as climate modeling, drug discovery, and trustworthy machine learning. Across the Atlantic, the European Union’s Horizon Europe program, with its €95 billion budget, has allocated large portions for “digital, industry, and space”, with AI positioned centrally in its industrial strategy. Meanwhile, China has made AI one of the “frontier technologies” in its 14th Five-Year Plan, committing billions in direct subsidies and establishing whole cities as AI testbeds.

If these numbers seem excessive, it's only because you're viewing them from the wrong perspective. AI isn't just another field battling for a spot at the research table. It is the core itself, the very system where new ideas are created, developed, and implemented. One dollar invested in AI research today could easily yield ten dollars in return tomorrow: through faster protein folding models, more precise weather predictions, better batteries, and more advanced simulations of physical systems. This kind of leverage is so obvious that ignoring it would be a form of self-sabotage.

History provides a valuable analogy. In the 1950s, the semiconductor revolution shifted large amounts of funding from the niche of pure physics to the bright promise of transistors and integrated circuits. Today, no one laments that shift, because the transistor did not hinder physics; it enhanced it, supplying physics with new tools, new industries, and greater significance. The shift toward AI should be seen the same way: not as science being overtaken, nor as a passing trend, but as a fundamental reallocation toward a technology that amplifies everything it touches.

Thus, while the sums invested in AI may seem staggering, even excessive, to an ecologist struggling with limited fieldwork grants or a taxonomist naming beetles on a tight budget, they should be seen as strategic necessities. In the race for discovery, funders are simply backing the technology most likely to advance their goals. And that technology, for the foreseeable future, is artificial intelligence.

AI as a Force Multiplier in Science

It is fashionable, in certain anxious areas of academia, to talk of AI as if it were a cuckoo in the scientific nest, displacing older, nobler fledglings. The truth is more straightforward and, if anything, more exciting: AI is not a rival to the sciences but their most powerful ally. It is the lens that sharpens their focus, the accelerator pushed firmly to the floor of discovery.

Medicine and Biology: In the biological sciences, AI has already transformed the field. DeepMind’s AlphaFold amazed the world in 2020 with the accuracy of its protein-structure predictions and, by 2022, had predicted the structures of nearly every known protein, an achievement that, using traditional methods, would have taken entire lifetimes and countless labs. Pharmaceutical companies, recognizing this shift, have invested billions into AI-driven drug discovery. Insilico Medicine announced in 2023 that an AI-created molecule had entered Phase I clinical trials, reducing what used to be a decade-long process to just two or three years. By 2030, analysts expect that up to 30% of new drugs might be discovered with significant AI help, a change as revolutionary to medicine as antibiotics once were.

Climate and Environmental Science: Climate science, long hindered by computational limitations, has adopted AI as a game changer. Google’s DeepMind has shown AI weather forecasts that surpass traditional models for short-term predictions, a breakthrough with life-saving potential for flood, hurricane, and wildfire alerts. The European Centre for Medium-Range Weather Forecasts now incorporates AI models into its pipeline, reporting improvements in accuracy at a fraction of the cost of supercomputer time. It’s no exaggeration to say that AI could save more lives in the next decade through better disaster forecasting than any single vaccine program.

Physics and Astronomy: In physics, AI handles the mental labor once carried out by large groups of graduate students. CERN, faced with the petabytes of data generated by the Large Hadron Collider, uses AI algorithms to separate signal from noise, speeding up discoveries in particle physics. In astronomy, AI methods have enhanced telescope images, reconstructed faint signals from distant galaxies, and even helped produce the first image of a black hole. These are not toys; they are essential tools that make understanding the universe possible.

Materials Science and Engineering: Meanwhile, the design of new materials, a field once reliant on trial, error, and endless tinkering, is being revolutionized by generative AI models capable of proposing novel alloys, catalysts, and superconductors. Microsoft and the Pacific Northwest National Laboratory recently reported an AI-assisted discovery of a new material for battery storage, reducing years of experimental work to months. The global market for AI in materials science is projected to reach tens of billions of dollars by the 2030s, a figure that, while promising to investors, also signals new eras of energy storage, cleaner chemistry, and more durable infrastructure.

Social and Economic Sciences: Even the so-called softer sciences have not been immune. Economists use AI models to analyze market dynamics, sociologists examine vast datasets to identify patterns of human behavior, and linguists employ large language models not just for translation but to track the evolution of culture itself. If the human sciences feel uneasy about their new partner, they should remember that the statistical revolution of the 20th century was also initially met with suspicion before it became essential.

Taken together, these examples show a simple truth: AI is not a competitor for research funding but a way to amplify research’s purchasing power. Funding AI means supporting biology, physics, climate science, and more, but faster, deeper, and at previously unimaginable scales. It is the foundation on which all sciences now grow taller.

Why the Pivot Was Inevitable

The late 19th century saw the unstoppable rise of electricity, as governments and business leaders invested heavily in power grids, dynamos, and the first incandescent lamps. By the mid-20th century, semiconductors had taken the lead, attracting government funds, military spending, and private wealth. The Cold War itself can be seen as a story of silicon, ranging from missile guidance systems to the dawn of the microprocessor. By the 1990s and early 2000s, the internet, initially dismissed as a pastime for academics and hobbyists, became the new hub of investment, fueling the dot-com bubble and, as it matured, becoming the backbone of the modern world.

Each of these waves followed the same pattern: initial skepticism, quick demonstration of transformative usefulness, then a surge of funding so large that competing fields complained of neglect. The complaints were not entirely unfounded. In the 1970s, physicists lamented the flood of money for computing hardware at the cost of “pure” theoretical research. In the 1990s, social scientists complained about the gravitational pull of internet research grants, which seemed to skew entire funding agencies. Yet, in hindsight, who now argues that those investments were wasted? Who, with a straight face, wishes that DARPA had not funded the ARPANET, or that IBM and Bell Labs had been more cautious with their transistor budgets?

AI is the latest in this lineage, but with a crucial difference. Unlike electricity, semiconductors, or the internet, AI is not just a sectoral technology. It is versatile in its applications, acting as an intellectual parasite that attaches to any host discipline and enhances its power. Where electricity provides light and power, AI offers foresight and speed. Where semiconductors reduced the size of machines, AI shortens the gap between hypothesis and outcome. Where the internet connected people, AI connects ideas, datasets, and methods in ways that were previously unimaginable.

Thus, the so-called “pivot” of funding is less a betrayal of scientific diversity than an acknowledgment of what is inevitable. The numbers support this: global public and private spending on AI R&D is expected to surpass $300 billion annually by 2026, outpacing the combined research budgets of most nations. China’s latest Five-Year Plan allocates tens of billions for AI infrastructure, while the United States’ National AI Initiative claims an increasing portion of the federal science budget. The European Union, not to be outdone, has integrated AI into everything from agriculture to defense research. The trend is clear.

The realization, then, is not that AI has “stolen” the spotlight, but that the spotlight was always going to shift. Science is not a democracy of equal votes; it is a marketplace of transformative potential. When a technology emerges that promises to accelerate all others, the laws of intellectual economics dictate that money will follow, and it has.

The Risks of Overconcentration

Of course, no sensible person would deny that an excess of funding in one area causes a shortage in another. History’s major rebalancings always come with a cost. The current obsession with AI has created real concerns, and they are worth discussing before they are dismissed.

The first issue is the charge of neglect. Biologists complain that core wet-lab science is being overshadowed by computational projects; physicists mutter that experimental apparatuses, which cannot be digitized, risk being underfunded; humanists warn that entire faculties may be reduced to support roles for data science. On the fringes, there is evidence to support these claims. A 2024 UNESCO report noted that, while AI-related publications increased nearly 700% between 2015 and 2023, funding for traditional earth sciences, chemistry, and even some areas of medical research stagnated or decreased in real terms. A mid-career climatologist might reasonably ask whether their work on ice-core drilling is any less urgent than training yet another predictive model.

The second concern is ethical. Critics argue that focusing resources on AI risks worsening the problems it aims to address. An overfunded AI industry may speed up surveillance, reinforce biases, and solidify monopolies held by a few large tech companies. If we make AI the central focus of scientific research, do we risk creating a monoculture where all inquiries go through the same limited perspective? History shows that monocultures, whether in crops, economies, or ideas, are prone to collapsing suddenly and dramatically. We also see AI being used in warfare, reducing human lives to 0s and 1s when deciding whom to kill and whom to spare. That is an unsettling future to contemplate, and as AI becomes more effective at it, its use in warfare will only increase.

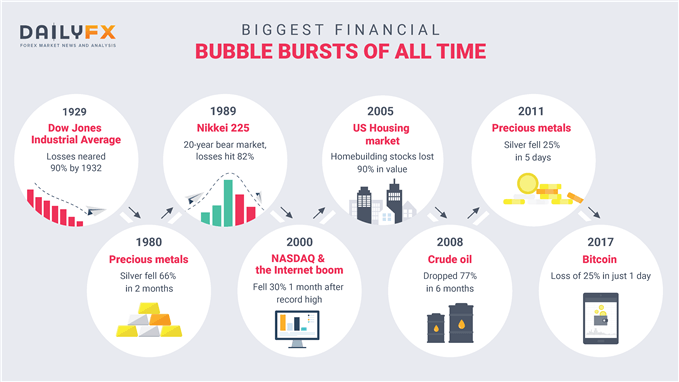

A third concern is more straightforward: the risk of a hype cycle. Those with long memories remember the “AI winters” of the 1970s and 1990s, when exaggerated promises exceeded actual progress and funding was abruptly cut off. Some ask whether we are just inflating another bubble. When the next recession hits, will AI research collapse like the dot-com bubble of 2000, leaving labs stranded and postdocs unemployed? I’ve personally witnessed the collapse of the nanotech bubble, and it was indeed brutal, but its scale was far smaller than that of the AI bubble, whose burst I expect to be catastrophic, to put it mildly.

These objections are not trivial. They act as an essential counterbalance to the unchecked optimism of policymakers and entrepreneurs. Ignoring them would lead to arrogance, and arrogance is the main enemy of science. However, as with previous shifts in scientific funding, these critiques, though serious, do not negate the overall certainty of the shift. Instead, they highlight the importance of maintaining balance and vigilance as we move into the next phase.

The Case for Balance: Complementarity, Not Competition

The problem with the jeremiads against AI is not that they are wrong to warn of distortion, but that they misunderstand what distortion actually is. Research funding has never been evenly distributed, nor should it be. The telescope didn't wait for equal consideration of the astrolabe, nor did the microscope pause to give alchemy a fair chance. Progress is not fair; it is cumulative and often mercilessly selective.

The genius and the danger of AI lie in its promiscuity. It does not mainly replace other sciences but rather infiltrates them. Already, AI accelerates drug discovery, where machine-learning models analyze billions of potential compounds in days, a process that used to take decades. In astrophysics, AI is integral to the search for exoplanets, parsing terabytes of telescope data that no human eye could examine. Climate science, which risks being overshadowed by the allure of AI, has quietly adopted it as well: neural networks now enhance storm-path predictions and long-term climate models with remarkable accuracy. Even in the humanities, that last stronghold of anti-technological sentiment, AI is making progress by assisting with textual analysis, translation, and the reconstruction of lost languages.

The point isn't that these disciplines should submit to AI as a new overlord, but that AI’s true promise is as a catalyst. A dollar spent on AI research doesn't necessarily come at the expense of climatology, oncology, or particle physics; it often makes them more effective. When the balance is right, AI becomes less like an imperial power draining resources and more like a multiplier, an unseen infrastructure through which all other sciences become sharper and more powerful.

This is why the language of “competition” between AI and other sciences is misleading. It promotes a zero-sum mindset that history contradicts. The transistor did not eliminate physics; it transformed it. The internet did not bankrupt medicine; it enhanced it with databases, telehealth, and genomic mapping. In each case, the initial surge of funding toward a single technology eventually spread outward, benefiting all areas. To oppose the shift to AI in the name of balance is therefore to misunderstand what balance truly means. The real argument should be for complementarity: ensuring that AI is integrated into other disciplines instead of developing as an isolated monoculture.

If policy-makers are wise, they will frame funding not as a matter of AI versus X, but AI within X. The challenge is not to slow down the juggernaut but to steer it through the fields of medicine, climate, energy, and culture, where its power to multiply effects can be most beneficial. In this light, the shift towards AI stops being a threat and instead becomes an opportunity for a new scientific integration.

The Shape of Things to Come

When I previously wrote about AI, my tone was more Cassandra than Prometheus. I saw in it the beginnings of surveillance states, intellectual monocultures, and the gradual replacement of human judgment by opaque algorithms. Those threats still exist; they still hang over us, and we would be foolish to dismiss them. At the same time, it would be equally foolish to ignore another undeniable truth: the shift of research funding toward AI is not just a passing trend, but a structural certainty of modern science.

The remarkable versatility of the technology makes resistance futile. AI isn't just a discipline in a narrow sense but a method, a way of recognizing patterns and making inferences that runs through every other field. To suggest otherwise is to mistake surface appearances for the true essence. Funding agencies have recognized this, perhaps more instinctively than they realize. By prioritizing AI, they aren't so much narrowing the scope of science as wisely betting that all other sciences will eventually benefit from its influence.

None of this means we should become complacent. Monocultures, whether in plants or ideas, tend to lead to disaster. We need to make sure AI is not a locked-off fortress but a foundation that supports progress in medicine, physics, biology, and even the humanities. Achieving this will require vigilance, careful governance, and a commitment to substance over hype. However, these are management issues, not existential threats.

The larger arc curves in a different direction. Instead of signaling the end of the sciences, the concentration of funding on AI could speed up their progress, as long as AI is appropriately integrated. What once seemed like a zero-sum struggle now looks more like a relay race: AI takes the baton not just to win alone, but to extend the race for everyone.

If there is a lesson here, it is that doom and hope are rarely as far apart as they seem. Yesterday’s threat becomes today’s tool. The shift to AI, despite its distortions, may eventually be remembered not as a narrowing of human exploration but as the moment it gained a sharper edge.


Oh dear. I do apologize, truly, but I feel obligated here to punish you with yet another rendition of my chronic rant.

You write, "The truth is more straightforward and, if anything, more exciting: AI is not a rival to the sciences but their most powerful ally. It is the lens that sharpens their focus, the accelerator pushed firmly to the floor of discovery."

This concept is really bad engineering, as it ignores the most fundamental element of the equation, the maturity level of the human beings who will obtain the powers thus delivered. This whole "accelerator pushed to the floor" concept is like a really clever car mechanic who upgrades his car so it can go 800mph, but then ignores upgrading his tires. So his car crashes, and he dies. Really bad engineering.

Yes, I know you threw in a few sentences to try to cover this, and have written articles skeptical of AI. But the fact that you wrote this article demonstrates you still don't truly get it. And that wouldn't matter, except that your failing here is representative of nearly the entire culture, and especially the scientific community.

Good and bad things have always emerged from the knowledge explosion. The OLD and OUTDATED way to look at this is that the good and bad will balance each other out, and thus we can keep moving forward. Old. Outdated. Over. Yesterday. Obsolete thinking!

The new reality is that as the scale of powers available to us grows, the bad increasingly obtains the ability to erase the good. Nuclear weapons illustrate the new reality perfectly. They also illustrate that this is not actually a new reality, but has been with us since the 1950s. The 1950s. And the "experts" STILL do not grasp it, and are STILL living in the 19th century intellectually. This is very much a "the emperor is wearing no clothes" situation.

The great progress that AI-powered research promises is a myth, because sooner or later this dramatic acceleration of the knowledge explosion will produce one or more powers that the human race cannot successfully manage, and then all the wonders to emerge from the knowledge explosion will be erased. If that sounds like hysterical alarmist speculation, don't forget that it could literally happen this afternoon. And if you still think I'm being hysterical, please keep in mind, we are headed straight towards WWIII in Europe as we speak.

And now, in fairness, to debunk my own rant above. Nothing that anybody might write on this topic is going to save us. The philosophical shift that is now required of us is simply too big for humanity to grasp with reason alone. And the outdated status quo group consensus that most people turn to as their chosen authority is far too entrenched to be dislodged by mere words. We will come to understand how dangerous our outdated relationship with knowledge is, but we will learn it not through reason but through pain. It is what it is.